It’s the end of an era! From May 2015 through October 2019, Cogmind used the same architecture for score uploading and leaderboard compilation that I’d patched together a few months before releasing the first alpha. That’s all changing as of Beta 9 and the introduction of a revamped “Ultimate Roguelike Morgue File,” so I thought I’d use this opportunity to share a rundown of how the old system worked before diving into the new one.

I must admit the old system was pretty dumb. I’m generally a proponent of brute forcing things using just what I know or can easily figure out, taking the simplest possible approach unless that really won’t work. It happened to more or less work here, so I took the path of least resistance and it stuck that way for years.

Being terrible at web dev and having trouble wrapping my head around networking and databases and whatnot, for this period I opted for a human-in-the-middle approach that can be summarized as:

- Copy scoresheets submitted by newly completed runs from the temporary directory to where they’ll be referenced by the leaderboards (the earliest versions of the leaderboards only offered name, location, and score, but this was expanded to link directly to the full scoresheet)

- Download all the new scoresheets to my local machine

- Run analyze_scores.exe to read in all the scoresheets and output the HTML data for the leaderboards

- Upload the latest leaderboards HTML file

I’m sure some combination of scripts could’ve handled this process automatically (and I did investigate and consider the possibilities), but I also justified keeping this workflow for years because having me in the middle for less than a minute each day at least allowed (forced) me to keep an eye on progress like how many runs were being completed each day, while also remaining vigilant in case something went wrong (this happened several times over the years as occasional bugs popped up, and I was usually able to respond before anyone else saw them because anything out of the ordinary had to make it past me first :P).

So literally once every day for 1,620 days I did the file copying and ran analyze_scores.exe on the data to produce the latest leaderboards. There were a couple days where I forgot or wasn’t able to update on time, but they were few and far between.

I appreciate the many offers of help I got over the years (since a lot of experienced web devs happen to play Cogmind :P), but I wasn’t ready to take on help in this area when there was clearly lots more development to happen and changes to be made, and making these modifications alongside someone else would significantly slow the process.

I wanted to wait until closer to the end of beta when the scoring data was more stable and the format was finalized before doing something about it. “Doing something” being automating the process and making the whole architecture a lot more robust. For me, if long-term efficiency is the goal this sort of thing is best done once the specifications are clear and we’re past the point of making sweeping changes.

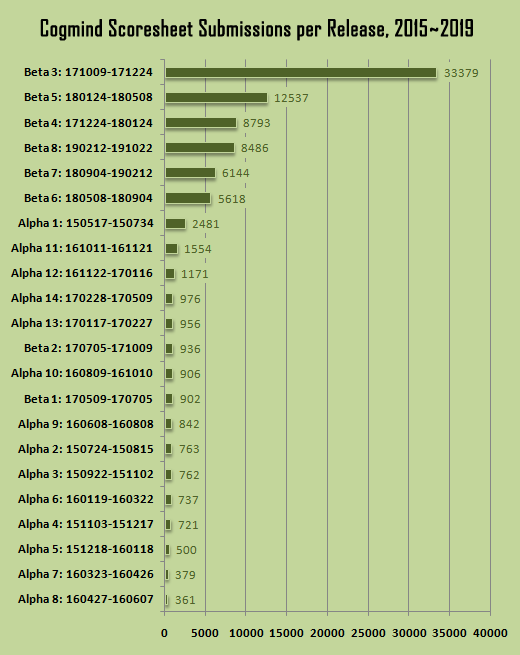

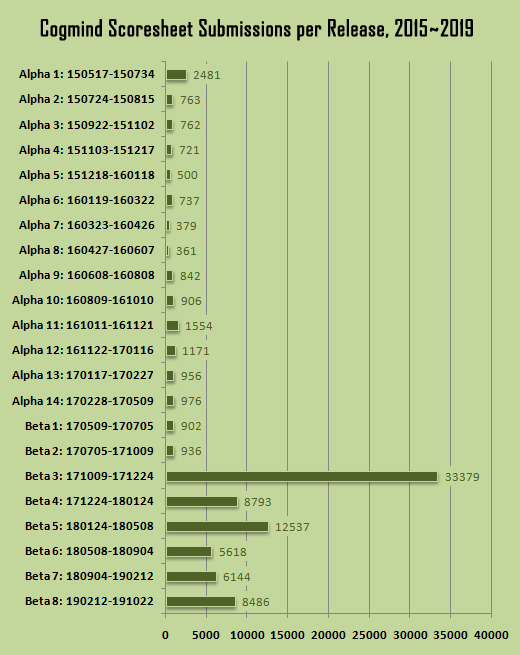

So Many Scoresheets

Over the years using this method we accumulated 89,904 scoresheets, a total of 1.0 GB of raw text data.

Organizing the data chronologically probably tells a better story…

A number of factors affected uploads over the years and across different versions, contributing to a lower number of scoresheets than we’d have otherwise. Here are some of the more significant ones:

- There were a few weeks in early alpha where I’d forgotten to actually save the scoresheets to my own records before wiping the server data for a new release, so while those leaderboards are still available, the underlying scoresheets have been lost to time.

- We lost maybe a couple thousand runs shortly after the Steam launch in 2017 with Beta 3. Here I learned that storing this many individual text files on a web server is not something you’re really supposed to do, because they generally have a limit of 10k files in a single directory xD. I ended up having to quickly patch in support for multiple subdirectories, although this only affected my own analysis and data organization, not the game itself, which could continue uploading to the same temp directory.

- Score submissions were originally opt-in throughout Alpha, then later changed to opt-out when we joined Steam (to collect data from a wider group of players and get a clearer picture of the real audience), but then not long after we had to switch back to opt-in again starting with Beta 6 due to the new GDPR concerns.

- The other reason behind the large drop heading into Beta 6 is that uploads were previously accepted from anonymous players, a practice that also ended with GDPR. Of all the scoresheets collected, 37,547 were anonymous (41.8%), though anonymous uploads normally accounted for two-thirds of the total within a given release, so if we were still accepting them I’d expect about three times as many submissions from recent versions as we currently have.

- Earlier this year during Beta 8 the server went down for a couple weeks and wasn’t accepting score submissions, and even after being fixed there was still an issue that prevented some scores from uploading.
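The subdirectory workaround mentioned in the list above can be illustrated with a small sketch. This is not the actual Cogmind code: the function name `bucketPath`, the per-directory cap, and the `scores/NNNN/` naming scheme are all hypothetical, chosen only to show how archived files might be spread across folders so that no single directory approaches the limit.

```cpp
#include <cstddef>
#include <string>

// Hypothetical cap, kept well under the roughly 10k-per-directory limit.
constexpr std::size_t kMaxFilesPerDir = 5000;

// Map the Nth archived scoresheet (0-based) to a path such as
// "scores/0003/name.txt". The directory naming is an assumption made
// for illustration only.
std::string bucketPath(std::size_t fileIndex, const std::string& filename) {
    std::size_t bucket = fileIndex / kMaxFilesPerDir;
    std::string dir = std::to_string(bucket);
    if (dir.size() < 4) dir.insert(0, 4 - dir.size(), '0'); // zero-pad so folders sort in order
    return "scores/" + dir + "/" + filename;
}
```

With a cap of 5,000, the 89,904 scoresheets collected would fit in 18 such folders.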

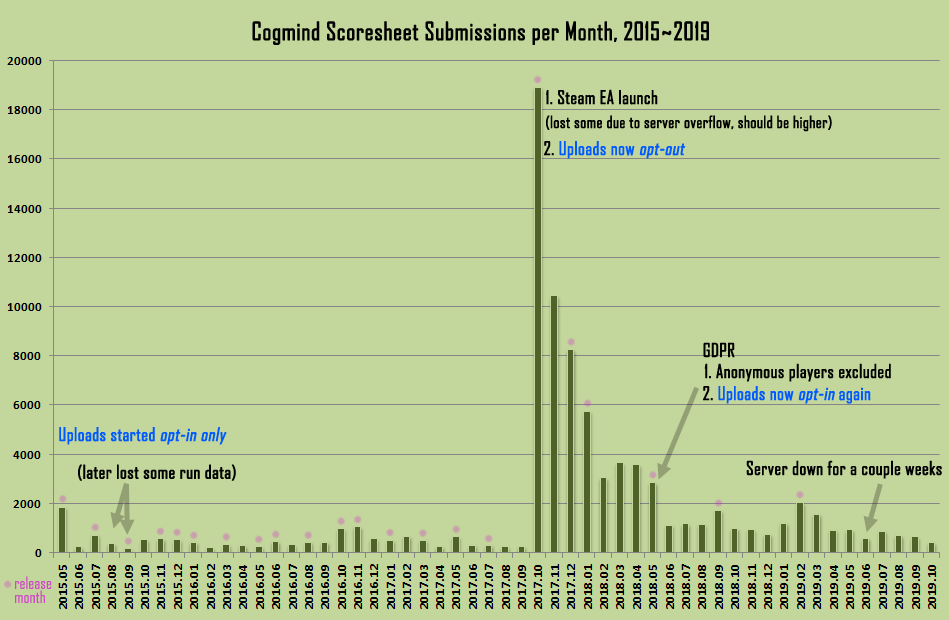

In terms of getting a clearer picture of the changes over time, even the chronological graph is somewhat distorted because it’s built on a per-release basis, even though some versions lasted only a month, while others persisted for several months or more. (Leaderboards are reset with each new release, rather than on a set time schedule.) Here I’ve compiled the monthwise scoresheet data, and annotated it with the above factors:

The compound effect of launching on Steam while also changing all new installs to upload stats by default really blew up the data, and it was interesting to collect and analyze some aggregate player metrics.

Of course the reverse happened half a year later upon switching back to opt-in and ignoring data from anonymous players. It’s true we could legally continue uploading anonymous data if it removes all unique identifiers completely, but to me data in that form is a lot less useful anyway, so for now I figure it’s best to just ignore it all rather than bloat the data set. Maybe later…
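For anyone curious what the monthwise grouping described above involves, here is a minimal sketch. It assumes each run carries a date in ISO-style "YYYY-MM-DD" form, which may not match how the real scoresheets store it, and `countByMonth` is a made-up name rather than anything from analyze_scores.

```cpp
#include <map>
#include <string>
#include <vector>

// Count runs per calendar month. Dates are assumed to be "YYYY-MM-DD"
// strings; the real scoresheets may record them differently.
std::map<std::string, int> countByMonth(const std::vector<std::string>& runDates) {
    std::map<std::string, int> counts;
    for (const std::string& date : runDates) {
        if (date.size() < 7) continue;   // skip malformed entries
        ++counts[date.substr(0, 7)];     // "YYYY-MM" keys sort chronologically
    }
    return counts;
}
```

Because `std::map` keeps its keys ordered, iterating over the result yields months in chronological order, ready to drop into a graph.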

Uploading Files with SDL_net

On the technical side of things, how did these scoresheets actually get on the server in the first place? Again this was a big hurdle for me since for some reason this sort of stuff feels like black magic, but at least I found some resources online and was able to slowly patch together something that eventually worked.

Cogmind uses SDL (1.2.14), so when possible I tend to use SDL libraries for features I need. In this case SDL_net is the networking library so I added that in early 2015.

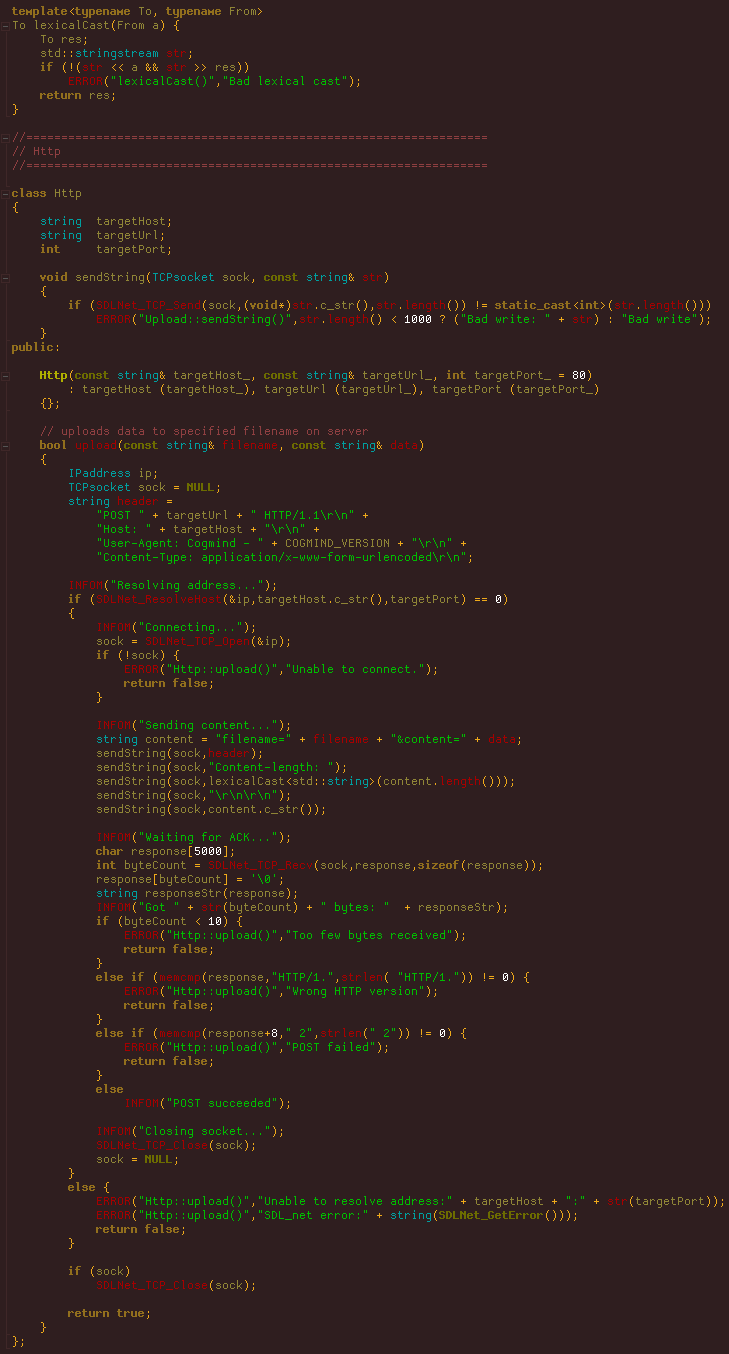

The solution for uploading a file ends up being pretty simple (once you have the answer xD):

The basics for getting a file uploading via SDL_net. (You can download this as a text file here.)

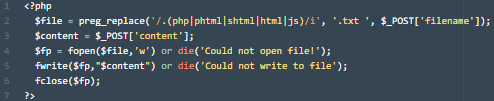

That’s on the game side, but the server also needs a way to actually create the destination file itself and write the content to it, which is handled by a little PHP file targeted by the upload.

All that’s required on the server to accept the text data and use it to create a file locally (source, the extension of which would need to be renamed).

And that’s it, just drop the PHP file in the target directory, and from the game call something like this:

Http connection("www.gridsagegames.com","/cogmind/temp/scores/upload.php");

bool uploadSuccessful = connection.upload("filename_here.txt",DATA_GOES_HERE);
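To give a rough idea of what a call like that puts on the wire, here is a sketch of building the raw request text. This is not the `Http` class from the listing. It assumes a plain HTTP/1.1 POST where the filename travels as a query parameter (called `file` here, a made-up name) and the scoresheet is the text/plain body; the real upload.php may expect something different. With SDL_net, a string like this would be written to a TCP socket opened on the host's port 80.

```cpp
#include <string>

// Build a raw HTTP/1.1 POST request carrying text data.
// Assumptions (not from the original code): the filename is passed as a
// "file" query parameter and the body is sent as text/plain.
// A real version would also URL-encode the filename.
std::string buildUploadRequest(const std::string& host,
                               const std::string& path,
                               const std::string& filename,
                               const std::string& data) {
    std::string request;
    request += "POST " + path + "?file=" + filename + " HTTP/1.1\r\n";
    request += "Host: " + host + "\r\n";
    request += "Content-Type: text/plain\r\n";
    request += "Content-Length: " + std::to_string(data.size()) + "\r\n";
    request += "Connection: close\r\n";
    request += "\r\n";   // blank line separates headers from body
    request += data;
    return request;
}
```

The Content-Length header matters here: without it the server has no reliable way to know where the scoresheet text ends.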

This process ideally needs to be run in a separate thread to keep it from hanging the rest of the game in case the connection is slow or has other issues.
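As an illustration of keeping the upload off the main thread, here is a minimal sketch using C++11's `std::async`. Cogmind itself may well use SDL's own threading instead, so treat this purely as one possible approach; `slowUpload`, `startUpload`, and `uploadFinished` are hypothetical names, and the upload function only simulates a slow connection.

```cpp
#include <chrono>
#include <future>
#include <string>
#include <thread>
#include <utility>

// Stand-in for the blocking upload call; it only simulates a slow connection.
bool slowUpload(const std::string& filename, const std::string& data) {
    std::this_thread::sleep_for(std::chrono::milliseconds(50));
    return !filename.empty() && !data.empty();
}

// Start the upload on another thread and return immediately.
std::future<bool> startUpload(std::string filename, std::string data) {
    return std::async(std::launch::async, slowUpload,
                      std::move(filename), std::move(data));
}

// Non-blocking check the main loop can call once per frame.
bool uploadFinished(std::future<bool>& pending) {
    return pending.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
}
```

The game loop would call `startUpload` when a run ends, keep rendering as usual, and only read the result with `get()` once `uploadFinished` reports true.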

So there you have it, that’s how nearly 90,000 text files made their way to the server from 2015 to 2019!

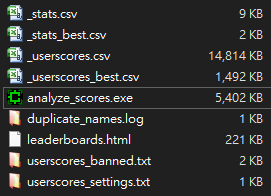

analyze_scores.exe

The analyze_scores program is nothing to be proud of, that’s for sure. It was technically implemented within Cogmind itself as a special mode, making it easier to reference all the game data, structures, enums, and constants as necessary. Of course it wasn’t integrated throughout the game, just sitting there in its own file waiting to be ripped out one day.

By the time it was removed for Beta 9, it had bloated its way to over 6,000 lines, including lots of legacy code, unused features, bad organization, and confusing naming… basically a complete mess. I never bothered refactoring or designing it well in the first place because I knew it was going to be entirely replaced later on, though I guess at the time I didn’t realize “later on” would come over four years later, and in the meantime whenever I needed to go back and make modifications or additions it was a real headache to even make sense of whether I was doing it right or sometimes even what I was looking at.

Anyway, bad code. Good riddance.

By comparison the recently implemented replacement is both elegant and easy to follow, with all static data properly condensed into an external text file. I’ll be talking about that and more in a followup article (now available here).

Since I was stuck with it for a while but really didn’t want to actually rewrite the thing, an increasing number of features were just tacked on in place, some of the more notable ones including:

- An external settings file. This was added so I didn’t have to actually recompile whenever I wanted to make minor changes to the program’s behavior.

- A banned player list. I eventually needed a way to ban players by ID, so there was a text file against which the files were checked before analyzing any player data.

- A file of alternate names used by a single player ID. I didn’t really need a file for this, but was mainly curious and added it anyway when finally implementing the ability for the leaderboards to detect and rank players by ID rather than name. The earliest iterations were based purely on names, which meant players could easily spoof other players, or individual players could easily occupy multiple places on the leaderboard by simply changing their name. Not very many players actually changed their name, and messing with the integrity of the leaderboards isn’t a big worry with a community like Cogmind’s, but it’s better to head off the inevitable.
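The last two features in the list above (the ban list and ranking by ID rather than name) can be sketched together. This is a simplified illustration, not the analyze_scores code: the `Run` and `Entry` structs and their field names are hypothetical stand-ins for whatever a parsed scoresheet actually contains.

```cpp
#include <algorithm>
#include <map>
#include <set>
#include <string>
#include <vector>

// Simplified stand-in for a parsed scoresheet; field names are hypothetical.
struct Run {
    std::string playerId;
    std::string name;
    int score;
};

struct Entry {
    std::string playerId;
    std::string name;             // name used on the best run
    int bestScore;
    std::set<std::string> names;  // every name seen for this ID
};

// Rank players by ID (one row each), skipping banned IDs.
std::vector<Entry> buildLeaderboard(const std::vector<Run>& runs,
                                    const std::set<std::string>& bannedIds) {
    std::map<std::string, Entry> byId;
    for (const Run& run : runs) {
        if (bannedIds.count(run.playerId)) continue;  // checked before any analysis
        auto it = byId.find(run.playerId);
        if (it == byId.end()) {
            byId.emplace(run.playerId,
                         Entry{run.playerId, run.name, run.score, {run.name}});
        } else {
            it->second.names.insert(run.name);        // remember alternate names
            if (run.score > it->second.bestScore) {
                it->second.bestScore = run.score;
                it->second.name = run.name;
            }
        }
    }
    std::vector<Entry> board;
    for (const auto& kv : byId) board.push_back(kv.second);
    std::sort(board.begin(), board.end(),
              [](const Entry& a, const Entry& b) { return a.bestScore > b.bestScore; });
    return board;
}
```

Keying on the ID means a renamed player still occupies a single row, and the `names` set is all that's needed to dump a list of alternate names per ID.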

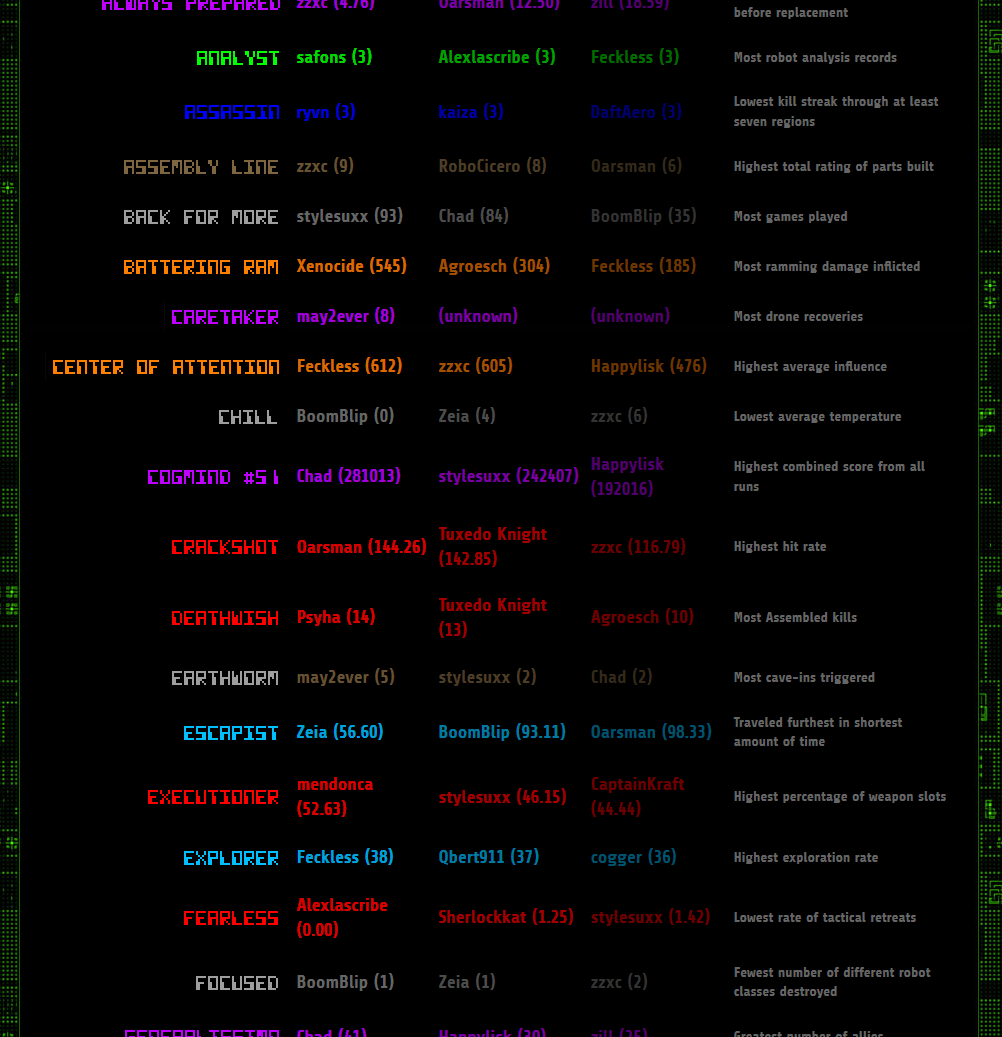

A big part of the early bloat came from the “Alpha Challenge 2015” tournament/event. For that I put together a pretty broad-ranging “achievement” ranking system based on scoresheet data, so in addition to regular score-based leaderboards, players were also ranked in 66 different categories.

Sample achievement results from AC2015. At first not all of the achievements were publicly defined beyond showing their name, so players weren’t entirely sure how or what they were calculating until the event’s conclusion. This helped prevent players from gaming the system, which would’ve been easy (and therefore boring and unfair). Plus it was fun for players to guess what some of them might mean as they followed the event’s progress :D

I wasn’t sure whether I’d do another tournament like that, but I definitely wanted to, so the code stayed and it just sat there wasting time and space…

It was a lot of fun and I’d always looked forward to doing it again after first holding a similar mini-tournament in 2012 with Cogmind 7DRL, though in both cases we had a relatively small group of players so it was easier to manage, and “benefited from” no one being particularly good at the game yet. Although I’d like to hold more competitions, there are two main roadblocks:

- A lot of the same people would win anyway--you can almost guess who they’ll be :P

- It’s not feasible to combat cheating, and there are enough players now that it would be a concern (especially if I offered real prizes, which I’d love to do as before).

There are some ways around these roadblocks, but the various solutions come with their own issues.

Anyway, back to analyze_scores.exe, it outputs a lot more than just leaderboards!

Aside from the HTML file dropped onto the server, it also generates the materials to facilitate… you guessed it, analysis.

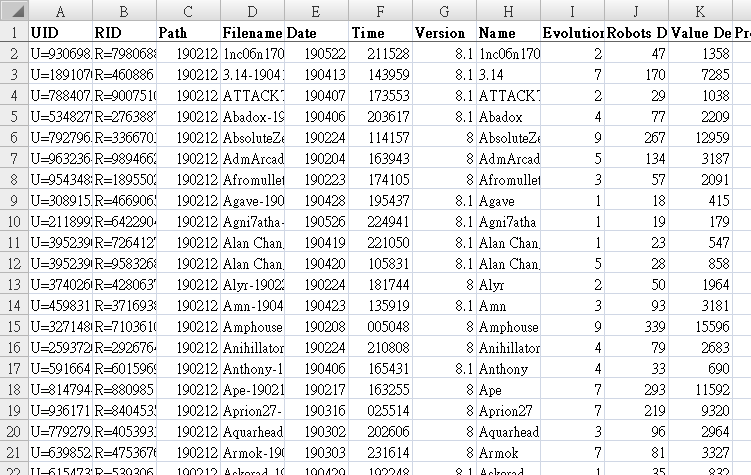

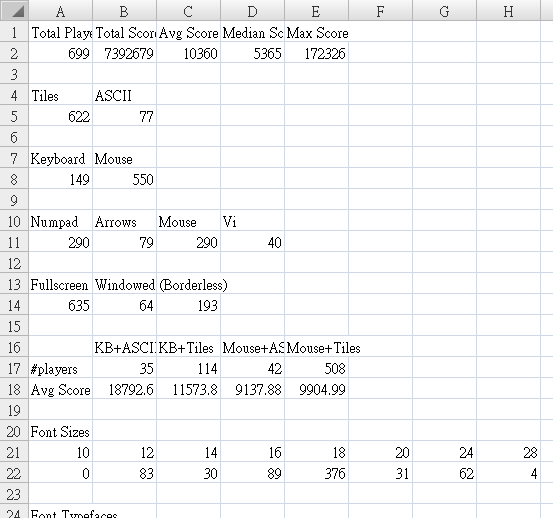

The userscores.csv file contains the complete contents of all submitted scoresheets for a given period, making it easy to examine the data in a single spreadsheet. There’s also the _best version containing each player’s best run so that each player only occupies one row, useful for different kinds of analysis.

Two other CSV files contain precalculated stats based on userscores data, organizing select factors that I wanted to keep an eye on across versions, and for easy conversion into graphs.

Sample _stats_best.csv data. “Best” stats are useful for exploring user preferences, since there’ll be only one set of data per player.

Although the userscores and stats analysis systems were originally created for the tournament back during Alpha 3, it wasn’t until later, starting with Alpha 8, that I decided to report on stats with each new major release. This is useful for keeping an eye on the general status of the community, and more specifically how recent changes have affected the game (and how players are interacting with them). Plus some players just enjoy having access to this information as well.

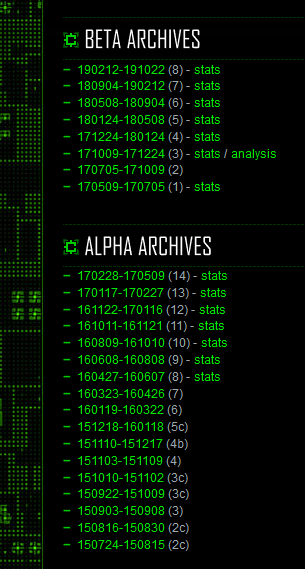

Stats have been published 14 separate times so far, all of them in this thread on the forums.

History stats as linked from the leaderboards alongside the relevant period’s scoresheet archives.

I’m not sure how we’ll be doing stat analysis in future versions since analyze_scores is no longer a thing under the new system. That said, from here on out we’ll have all the scoresheets collected in a brand new protobuf format rather than a trove of text files, so it shouldn’t be too much trouble, since presumably there is software out there we can use to run the analysis on that collection of data. More on that later!

Update 191226: The followup article covering the new system has been published, see “Leaderboards and Player Stats with Protobufs.”